Please stop calling this "frame interpolation"

AI has taken the world of anime by storm with something that many people call frame interpolation, but as someone with knowledge of both 2D and 3D animation, I find this term an insulting misnomer. But why? And what should we call it instead?

The reason has to do with the way that AI and other software process animated footage, what other animation techniques are used in the industry, and the results this so-called “technique” produces.

Explaining what’s going on

There are many videos circulating on YouTube about using AI to “remaster” anime in 4K resolution and upscale its framerate to 60fps. To many, this looks “smooth” (and yes, it is fluid), but to an animator’s eyes, the sense of motion breaks.

Jujutsu Kaisen in 60fps (3:50)

One of the examples where the visual artifacts and motion inaccuracies are most noticeable. I encourage you to pause the video between shots or at abrupt changes.

It’s funny how the most popular examples are all fighting scenes. This all reminds me of this guy:

(By the way, watch Shirobako when you can, it’s an amazing anime about the Japanese animation industry)

This software works like this: it takes information from two frames of a video and makes guesses to create the “frame in between” them.
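To make the idea concrete, here is the crudest possible way of “making a frame in between”: averaging two frames pixel by pixel. This is an illustrative simplification, not how any particular AI model works (those estimate motion between the frames and are far more complex). The tiny one-row grayscale “frames” and the `blend_midframe` name are made up for the example.

```python
def blend_midframe(frame_a, frame_b):
    """Naive in-between: the per-pixel average of two grayscale frames.

    Each frame is a list of rows of integer brightness values (0-255).
    Real tools estimate motion; this only shows the crudest possible guess.
    """
    return [
        [(a + b) // 2 for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


# One-row "frames": a bright pixel moves from x=1 to x=4.
frame_1 = [[0, 255, 0, 0, 0, 0]]
frame_2 = [[0, 0, 0, 0, 255, 0]]
print(blend_midframe(frame_1, frame_2))  # [[0, 127, 0, 0, 127, 0]]
```

Instead of one object partway along its path, the result contains two faint copies at the old and new positions. Smarter tools try to avoid this kind of ghosting by estimating motion, but they are still guessing from finished frames.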

I won’t go into much detail about the inaccuracies, artifacts and overall poor quality of this software’s usual output, as there is already a very illustrative video about it (although it is somewhat biased towards traditional animation), but the output is bad enough to be considered unacceptable by animation standards.

So if we shun this kind of animation because it completely destroys the animator’s intentions while still calling it “frame interpolation,” you will understand why that also shuns professional animators once you realize the following…

“Frame interpolation” is already being used in the animation industry

There is already a technique, used in many branches of the animation industry, that relies on interpolation, although it usually goes by a different name: tweening. It is fundamentally different from whatever the AI is doing:

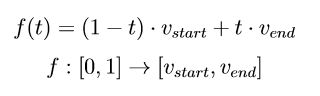

- It uses math to accurately and consistently calculate where and how to move something, instead of making guesses. A simple formula is the backbone behind it all: the linear interpolation formula.

- This technique is used during the animation process and is fully controlled by the animator. The AI works on already finished footage, over which animators have no control.
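The linear interpolation formula is short: for a value that goes from a at the first keyframe to b at the second, the value at normalized time t (0 at the first keyframe, 1 at the second) is a + (b − a) × t. A minimal Python sketch, where the keyframe values and the frame count are made-up examples:

```python
def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation: returns a at t=0, b at t=1, proportional values in between."""
    return a + (b - a) * t


# Made-up keyframes: x = 0 at frame 0 and x = 100 at frame 4.
start_x, end_x, last_frame = 0.0, 100.0, 4
for frame in range(last_frame + 1):
    t = frame / last_frame  # normalized time between the two keyframes
    print(frame, lerp(start_x, end_x, t))
# 0 0.0
# 1 25.0
# 2 50.0
# 3 75.0
# 4 100.0
```

Given the same keyframes, this always produces the same in-betweens; nothing is being guessed.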

While it’s true that the AI also uses math, its math is significantly more complex and has little to do with what tweening does.

In the animation industry, this technique is the backbone of pretty much every 3D animation (even heavily stylized works such as the latest LEGO movies, Spider-Man: Across the Spider-Verse and Puss in Boots: The Last Wish), as well as a type of 2D animation called puppet or cutout animation, named for its resemblance to paper cutout puppets.

Puppet animation in Bluey (0:21)

Though it’s not shown in this video, the sock puppet asks Bluey, the main character, “How can you be sure you’re not a puppet [instead of a real person]?” before the episode ends with a fourth-wall break jokingly demonstrating the show’s puppet animation process.

Tweening is also used in other media like motion graphics, CGI, visual effects, and video games! And I wouldn’t be surprised if the vast majority of these professionals disliked being lumped into the same bag as what the AI is doing, considering the differences between their workflows (and, for now, the overall quality).

So what do we call this, then? I came up with a much more suitable term for whatever the AI is doing, and it’s called:

Frame sampling

It clearly distinguishes itself from tweening and conveys what the process actually does: it samples (takes bits of information from) the frames of a video.

And I need to emphasize this: yes, it’s a video, not an animation. That’s because the number of new images (drawings) in an animation doesn’t necessarily match the number of frames in the video.

For example, there can be a new drawing every two frames. In animators’ jargon, this is called animating on twos. A new drawing every three frames is on threes, and so on. It’s also perfectly possible for different parts of a video to be animated at different rates, like the background panning on ones while the characters walk on twos.

Western traditional animation is typically animated on twos, while anime tends to be animated on twos or threes. You will rarely see anything animated beyond fours (six drawings per second at 24fps), because the illusion of motion tends to break down at lower drawing rates.
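The relationship between video frames and drawings is a simple hold: on N’s, each drawing stays on screen for N frames. A minimal sketch assuming a 24fps video; `drawing_for_frame` is a hypothetical helper name:

```python
def drawing_for_frame(frame: int, on: int) -> int:
    """Index of the drawing visible on a video frame when animating on `on`s.

    on=1 is "on ones", on=2 "on twos", and so on: each drawing is held for `on` frames.
    """
    return frame // on


FPS = 24  # assumed video framerate
for on, name in [(1, "ones"), (2, "twos"), (3, "threes"), (4, "fours")]:
    drawings = [drawing_for_frame(f, on) for f in range(8)]
    print(f"on {name}: {FPS / on:g} drawings/s, frames 0-7 show {drawings}")
# on ones: 24 drawings/s, frames 0-7 show [0, 1, 2, 3, 4, 5, 6, 7]
# on twos: 12 drawings/s, frames 0-7 show [0, 0, 1, 1, 2, 2, 3, 3]
# on threes: 8 drawings/s, frames 0-7 show [0, 0, 0, 1, 1, 1, 2, 2]
# on fours: 6 drawings/s, frames 0-7 show [0, 0, 0, 0, 1, 1, 1, 1]
```

Different layers can use different values of `on` at the same time, which is how a background can pan on ones while a character walks on twos.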

Interpolated animations (with tweens) are usually computed for every frame, so they are animated on ones. But this doesn’t have to be the case: recent animated films like Spider-Man: Into the Spider-Verse and The LEGO Movie adjusted the tweens so that they look animated on twos.

This effectively reduces the perceived framerate of the animation to a fraction of the base framerate (something animated on twos at 24fps shows only 12 new images per second, so it looks like 12fps).
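One way to get that stepped look from a tween, sketched here as an assumption rather than as those films’ actual pipeline, is to compute a new pose only on the frames that should get one and hold it in between. `tween_on` is a hypothetical helper, and the 9-frame, 0-to-100 move is an arbitrary example:

```python
def tween_on(n_frames: int, start: float, end: float, on: int = 1) -> list[float]:
    """Linear tween from start to end over n_frames (>= 2) video frames.

    A new pose is computed only every `on` frames and held in between,
    so on=1 moves every frame and on=2 looks animated on twos.
    """
    last = n_frames - 1
    values = []
    for frame in range(n_frames):
        posed = (frame // on) * on  # most recent frame that received a new pose
        t = posed / last
        values.append(start + (end - start) * t)
    return values


print(tween_on(9, 0.0, 100.0, on=1))
# [0.0, 12.5, 25.0, 37.5, 50.0, 62.5, 75.0, 87.5, 100.0]
print(tween_on(9, 0.0, 100.0, on=2))
# [0.0, 0.0, 25.0, 25.0, 50.0, 50.0, 75.0, 75.0, 100.0]
```

The video still runs at the same framerate; only the number of distinct poses per second changes.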

Comparison between animation on ones, twos and threes (0:27)

The video runs at a constant framerate of 24 frames per second.

Frame sampling completely disregards this and assumes everything is animated on ones, at a constant drawing rate, resulting in uneven and choppy animation (and, often, lots of visual artifacts). And that’s not even taking into account things like easing and spacing. Unless the AI can determine all of these factors, it will always deliver choppy, poor animation.
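Here is a rough illustration of why treating everything as “on ones” goes wrong. It doubles the framerate of a position animated on twos by inserting a midpoint between every pair of consecutive video frames. The midpoint is a stand-in for whatever a model would guess, and the position values are made up:

```python
def double_framerate(positions: list[float]) -> list[float]:
    """Insert a midpoint between every pair of consecutive video frames.

    A stand-in for a model that treats every frame as a new drawing (on ones).
    `positions` must be non-empty.
    """
    doubled = []
    for a, b in zip(positions, positions[1:]):
        doubled += [a, (a + b) / 2]
    doubled.append(positions[-1])
    return doubled


# Made-up x positions of a character animated on twos in a 24fps video.
on_twos = [0.0, 0.0, 10.0, 10.0, 20.0, 20.0, 30.0, 30.0]
doubled = double_framerate(on_twos)
steps = [b - a for a, b in zip(doubled, doubled[1:])]
print(steps)
# [0.0, 0.0, 5.0, 5.0, 0.0, 0.0, 5.0, 5.0, 0.0, 0.0, 5.0, 5.0, 0.0, 0.0]
```

The original drawings advanced 10 units every second frame at an even rhythm; the doubled version alternates two frames of movement with two frames of no movement at all, an uneven rhythm that neither keeps the animator’s twos nor moves smoothly.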

Tweening, on the other hand, doesn’t need to guess, because the animator already provides the necessary information and context. It uses relatively simple math to accurately remap the frames of an animation from 24fps to 60fps.
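Because a tween defines motion as a function of time, the same curve can simply be evaluated at 60fps instead of 24fps. A minimal sketch of that remapping; the smoothstep easing curve, the 12-frame duration and the helper names are assumptions for illustration:

```python
def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve: slow start, faster middle, slow end, for t in [0, 1]."""
    return t * t * (3 - 2 * t)


def sample_tween(start: float, end: float, duration_frames: int,
                 source_fps: float, target_fps: float) -> list[float]:
    """Evaluate an eased tween authored at source_fps at every frame of target_fps."""
    duration = duration_frames / source_fps  # length of the move in seconds
    n = round(duration * target_fps)         # frames the move spans at target_fps
    values = []
    for i in range(n + 1):
        t = min((i / target_fps) / duration, 1.0)  # normalized time, clamped to 1
        values.append(start + (end - start) * smoothstep(t))
    return values


at_24 = sample_tween(0.0, 100.0, 12, 24, 24)  # a 12-frame move at 24fps
at_60 = sample_tween(0.0, 100.0, 12, 24, 60)  # the same move remapped to 60fps
print(len(at_24), len(at_60))                  # 13 31
print(at_24[6], at_60[15])                     # 50.0 50.0 (both at the halfway time)
```

Both versions start and end at the same values and take the same half second; the 60fps one simply shows more in-betweens along the same eased curve.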

Video games do this constantly! Whenever the framerate drops below the usual 60fps, interpolation lets the game automatically calculate how to display the following frames so that the animation still plays out as intended.

It’ll just look less fluid, but it’ll take the same amount of time to complete (though not always, that depends on certain factors within the game) and the motions will be the same.
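Many games achieve this by making animation depend on elapsed time instead of on how many frames have been drawn. A minimal sketch of that idea, assuming a linear 0.5-second move; `play` is a hypothetical helper, and real engines are considerably more involved:

```python
def play(duration: float, fps: int, start: float = 0.0, end: float = 100.0):
    """Return (elapsed_seconds, value) for each frame drawn while a timed move plays.

    The value depends only on elapsed time (duration must be > 0), so a lower
    fps means fewer frames, not a slower or different move.
    """
    samples = []
    frame = 0
    while True:
        elapsed = frame / fps
        t = min(elapsed / duration, 1.0)  # progress through the move, clamped
        samples.append((elapsed, start + (end - start) * t))
        if t >= 1.0:
            return samples
        frame += 1


smooth = play(0.5, fps=60)
dropped = play(0.5, fps=30)
print(len(smooth), len(dropped))  # 31 16
print(smooth[-1], dropped[-1])    # (0.5, 100.0) (0.5, 100.0)
```

At 30fps the move is drawn in 16 frames instead of 31, but it lasts the same half second and lands in the same place.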

The term “frame sampling” is also used outside of AI, by video editing software like Premiere Pro, for one of its time interpolation options. That is a different technique in and of itself, but the overall concept is the same as what an AI does.
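For contrast, the simplest kind of retiming only reuses existing frames: each output frame shows whichever source frame is on screen at that moment. This is a sketch of that general idea, not a reproduction of Premiere Pro’s exact behavior; `sample_source_frames` is a hypothetical name:

```python
def sample_source_frames(n_output: int, source_fps: int, target_fps: int) -> list[int]:
    """For each output frame, the index of the source frame on screen at that moment.

    Only existing frames are reused; nothing new is drawn or generated.
    """
    return [(i * source_fps) // target_fps for i in range(n_output)]


print(sample_source_frames(10, 24, 60))
# [0, 0, 0, 1, 1, 2, 2, 2, 3, 3]
```

No new images are created; the source frames are just repeated in an uneven 3-2-3-2 pattern to fill 60fps.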

So from now on, I will refer to tweening either by that name or as frame interpolation, and I will use “frame sampling” to refer to using AI to upscale the framerate of an animation.